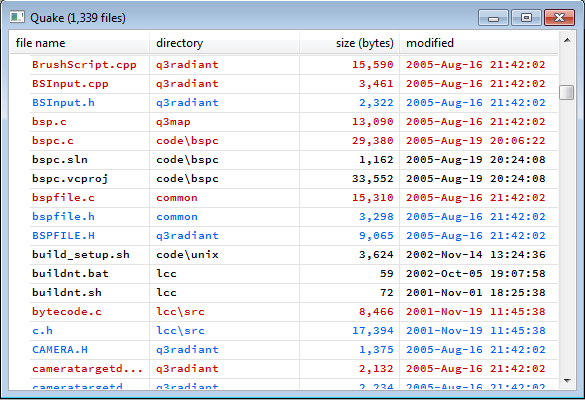

I like to have a personal project that I use to explore different programming ideas – my current personal project is an editor. A couple of features I am adding require the use of a list – for example, the editor is working on a certain set of files and I want to be able to see the files, perhaps sorted by file name:

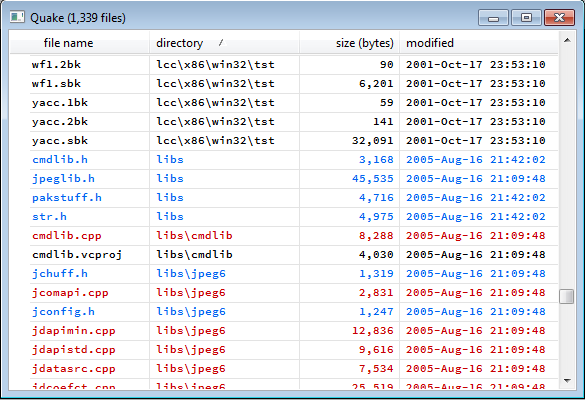

Perhaps sorted by directory:

This is completely typical list control behaviour – click on a column header to sort on that column and scroll through the list to see every element in its correct place. Of course I want this to be highly responsive, and I also want to be able to have many millions of elements in the list – I probably won’t ever have a project with a million files in it but I might well have a million symbols.

I am using a virtual list control – the list control itself does not have most of the data for the list. It knows how many elements are in the list and which block of elements it is displaying, then it calls back into my code to get the text for the elements it is displaying. The list control does not have to deal with millions of elements, it just has to deal with the elements it is currently displaying.

For my definition of “responsive” I am going to use Jakob Nielsen’s values from his article Response Times: The 3 Important Limits:

< 0.1 second for that instantaneous feeling

< 1 second to avoid interrupting the user’s flow of thought

< 10 seconds to avoid losing the user’s attention altogether.

Useful definitions

For this example we have a very simple struct containing file metadata, and a comparator class that gives us an ordering of FileMetaData objects. I have left out the details of the comparator class, but we can tell it to compare on filename, directory, size or last write time.

struct FileMetaData

{

std::string fileName_;

std::string directory_;

std::size_t size_;

std::time_t lastWriteTime_;

};

class Comparator

{

public:

bool operator()(

FileMetaData const& a,

FileMetaData const& b )

}

There are also a few typedefs that will come in useful:

typedef std::vector< FileMetaData > FMDVector;

typedef FMDVector::iterator Iter;

typedef FMDVector::reverse_iterator ReverseIter;

typedef FMDVector::size_type UInt;

The problem

When we click on a column header the list should be resorted on the data in that column. We should be able to scroll through the list and see every list element in the correct place.

Solution #0

Since we need to sort the elements in the list why not just use std::sort? The code is simple and obvious:

void

sortAllElements(

Comparator const& comparator,

FMDVector& files )

{

std::sort(

std::begin( files ),

std::end( files ),

comparator );

}

(I know that the sort barely deserves to be inside its own function but it’ll make it consistent with our more complicated sorting later).

Whenever we click on a column header we update the comparator and call sortAllElements. The code is simple and it works.

The catch comes when we look at performance. Here are the times to sort different numbers of elements:

| # elements | sort all |

|---|---|

| 1,000 | 0.000s |

| 10,000 | 0.000s |

| 100,000 | 0.187s |

| 1,000,000 | 2.480s |

| 10,000,000 | 33.134s |

The timings look great up to 10,000, even sorting 100,000 elements is acceptable. Once we get into the millions though the numbers get much worse. We really don’t want to be waiting around for 2 seconds, let alone 30+ seconds.

The C++ standard tells us that std::sort has a complexity of O( n * log n ). As we have just seen, we get good results with small numbers of elements, but much worse results as n gets larger.

So the straightforward sort works well for smaller numbers of elements, but we are going to need something smarter for millions of elements. There are several possibilities:

- Offload some of the work onto separate threads – even if we didn’t speed up the overall sort time we would at least avoid locking up the main UI thread.

- Use previous knowledge of the ordering to avoid having to do a complete re-sort.

- Keep multiple copies of the list, each sorted by a different column so that the right one can be instantly swapped in.

For the purposes of this blog post I am going to avoid #1 because threading opens up its own can of worms. I will declare that #2 is invalid because we don’t have any previous knowledge (or at least we have to have a solution that works responsively even if we don’t have any previous knowledge of the ordering). I am not going to consider #3 because I don’t want to make that particular speed / space tradeoff.

Instead I am going to take my cue from the virtual list control. It only needs to know about the elements it is displaying – those are the only elements that need to be in sorted order.

The problem has now moved from “sort the entire list” to “sort that portion of the list that is displayed”. If we can always guarantee that the portion of the list that is displayed is sorted correctly, the user will never know the difference. This has the potential to be much quicker – the number of displayed elements is relatively small. I can get around 70 lines on my monitor, let’s round that up to 100 – we need to get the 100 displayed elements correctly sorted and in their right place.

Solution #1

We’ll start with a straightforward case, the 100 elements we want to display are right at the beginning of the list (quite possibly the case when the list is first displayed). The standard library supplies us with many more sorting related algorithms than std::sort, and for this case it gives us std::partial_sort. As the name suggests, it partially sorts the list – we tell it how much of the beginning of the list we want it to sort:

void

sortInitialElements(

UInt k,

Comparator const& comparator,

FMDVector& files )

{

std::partial_sort(

std::begin( files ),

std::begin( files ) + k,

std::end( files ),

comparator );

}

(There is no error handling in the function itself – I am assuming that the caller has ensured the values being passed in are within range.)

We have an additional parameter – k, the number of elements we want sorted correctly. We use k to create an iterator that we pass to std::partial_sort.

According to the standard, std::partial_sort has a complexity of O( n * log k ). Since k is likely to be much smaller than n (we’re assuming that k is 100) we should get better performance:

| # elements | sort all | sort initial |

|---|---|---|

| 1,000 | 0.000s | 0.000s |

| 10,000 | 0.000s | 0.000s |

| 100,000 | 0.187s | 0.000s |

| 1,000,000 | 2.480s | 0.047s |

| 10,000,000 | 33.134s | 0.406s |

This is looking much better. We are still in the instantaneous category at one million elements and even though 10 million is pushing us to around half a second it is still a vast improvement over sorting the entire list.

Moving on, it’s all very well being able to sort the beginning of the list correctly but we also want to be able to scroll through the list. We want to be able to display any arbitrary range of the list correctly.

Solution #2

Once again, the standard provides us with a useful algorithm. The function std::nth_element lets us put the nth element of a list into its correct place, and also partitions the list so that all of the elements before the nth element come before that element in sort order, and all of the elements after come after the nth element in sort order.

So, we can use std::nth_element to get the element at the beginning of the range into the correct place, then all we have to do is sort the k elements afterwards to get our range sorted. The code looks like this:

void

sortElementsInRange(

UInt firstElementIndex,

UInt k,

Comparator const& comparator,

FMDVector& files )

{

std::nth_element(

std::begin( files ),

std::begin( files ) + firstElementIndex,

std::end( files ),

comparator );

std::partial_sort(

std::begin( files ) + firstElementIndex,

std::begin( files ) + firstElementIndex + k,

std::end( files ),

comparator );

}

and the performance data:

| # elements | sort all | sort initial | sort range |

|---|---|---|---|

| 1,000 | 0.000s | 0.000s | 0.000s |

| 10,000 | 0.000s | 0.000s | 0.016s |

| 100,000 | 0.187s | 0.000s | 0.016s |

| 1,000,000 | 2.480s | 0.047s | 0.203s |

| 10,000,000 | 33.134s | 0.406s | 2.340s |

A million elements pushes us outside the “instantaneous” limit, but still acceptable. Unfortunately, 10 million elements isn’t great.

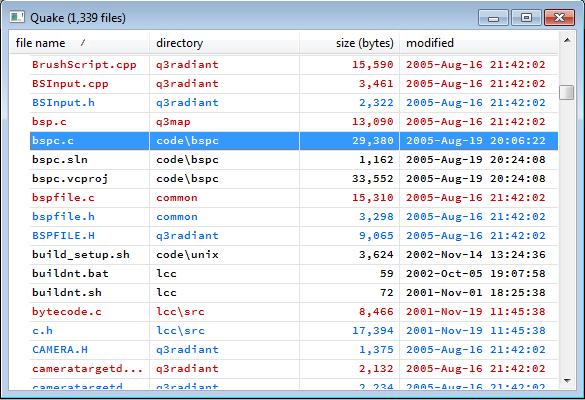

There is one more feature of sorting lists that I love. I use it in my email all the time. By default, my email is sorted by date – I want to see the most recent emails first. Sometimes though I’ll be looking at an email and want to see other emails from that sender. When that happens, I can click on the “sender” column header and have the email I currently have selected stay in the same place while the rest of the list sorts itself around the selected email. The selected element acts like a pivot and the rest of the lists moves around the pivot.

Mouse over this image to see an example of this sort on our list of files:

Solution #3

WARNING – the code I am about to present does not work under all circumstances. I want to focus on the sorting algorithms so for the time being I am not going to clutter the code up with all of the checks needed to make it general. DO NOT USE THIS CODE. I will write a follow up blog post presenting a correct version of this function with all of the checks in place.

Sorting around a pivot element is a more complicated function than the others so I’ll walk through it step by step.

void

sortAroundPivotElementBasic(

UInt nDisplayedElements,

UInt topElementIndex,

UInt pivotElementIndex,

Comparator const& comparator,

UInt& newTopElementIndex,

UInt& newPivotElementIndex,

FMDVector& files )

nDisplayedElementsThe number of elements displayed on screen.topElementIndexThe index of the top displayed element of the list.pivotElementIndexThe index of the pivot element.comparatorSame as the other sort functions – the object that lets us order the list.newTopElementIndexAn output parameter – the index of the top element after sorting.newPivotElementIndexThe index of the pivot element after sorting.filesThe list of files we are sorting.

Our first job is to find out the new position of the pivot element. Previously we had used std::nth_element to partition the range, this time, since we actually know the value of the element, we’ll use std::partition:

FileMetaData const pivotElement(

files[ pivotElementIndex ] );

Iter pivotElementIterator(

std::partition(

std::begin( files ),

std::end( files ),

std::bind( comparator, _1, pivotElement ) ) );

Notice that although we are using the same comparator we have used for all of our sort operations we are binding the pivot element to the comparator’s second argument. std::partition expects a unary predicate – it moves all elements for which the predicate returns true to the front, and all elements for which the predicate returns false to the back. It then returns an iterator corresponding to the point between the two partitions. By binding our pivot element to the second parameter of comparator we get a unary predicate that returns true if an element is less than the pivot element and false otherwise.

We know where the pivot element ends up in the list, and we also know where it ends up on the display – we want it to stay in the same position on the display. This means we can work out the range of elements that are displayed.

UInt const pivotOffset(

pivotElementIndex - topElementIndex );

Iter displayBegin( pivotElementIterator - pivotOffset );

Iter displayEnd( displayBegin + nDisplayedElements );

Now we can fill in two of our return values.

newTopElementIndex = displayBegin - std::begin( files ); newPivotElementIndex = pivotElementIterator - std::begin( files );

To recap, we have two iterators that tell us the range of elements that are displayed – displayBegin and displayEnd. We have a third iterator that tells us where the pivot element has ended up – pivotElementIterator. We also know that the elements have been partitioned – we have two partitions, each with all the right elements, but in the wrong order. The boundary between those two partitions is the location of the pivot element.

We’ve used std::partial_sort a couple of times already, we can use it again to sort the bottom section of the display:

std::partial_sort( pivotElementIterator, displayEnd, std::end( files ), comparator );

Of course we also want to sort the top section of the display. All we need is a version of std::partial_sort that will sort the end of a list, not the beginning. The standard library doesn’t supply us with that algorithm, but it does give us a way of reversing the list. If we reverse the list above the pivot element we can use std::partition. We do this using reverse iterators:

std::partial_sort( ReverseIter( pivotElementIterator ), ReverseIter( displayBegin ), ReverseIter( std::begin( files ) ), std::bind( comparator, _2, _1 ) );

Reverse iterators are easily constructed from the forward iterators that we already have, and they plug right into algorithms just like regular iterators do. The beginning of our reversed list is at the pivot element, the end is at std::begin( files ) and the element we are sorting to is the top of the display. Notice that we had to reverse our comparator as well (and notice that reversing the comparator does not involve applying operator ! to its output, we actually need to reverse the order of the arguments).

Here’s the function in one block:

void

sortAroundPivotElementBasic(

UInt nDisplayedElements,

UInt topElementIndex,

UInt pivotElementIndex,

Comparator const& comparator,

UInt& newTopElementIndex,

UInt& newPivotElementIndex,

FMDVector& files )

{

FileMetaData const pivotElement(

files[ pivotElementIndex ] );

Iter pivotElementIterator(

std::partition(

std::begin( files ),

std::end( files ),

std::bind( comparator, _1, pivotElement ) ) );

UInt const pivotOffset(

pivotElementIndex - topElementIndex );

Iter displayBegin( pivotElementIterator - pivotOffset );

Iter displayEnd( displayBegin + nDisplayedElements );

newTopElementIndex = displayBegin - std::begin( files );

newPivotElementIndex =

pivotElementIterator - std::begin( files );

std::partial_sort(

pivotElementIterator,

displayEnd,

std::end( files ),

comparator );

std::partial_sort(

ReverseIter( pivotElementIterator ),

ReverseIter( displayBegin ),

ReverseIter( std::begin( files ) ),

std::bind( comparator, _2, _1 ) );

}

And the performance?

| # elements | sort all | sort initial | sort range | sort around pivot |

|---|---|---|---|---|

| 1,000 | 0.000s | 0.000s | 0.000s | 0.000s |

| 10,000 | 0.000s | 0.000s | 0.016s | 0.000s |

| 100,000 | 0.187s | 0.000s | 0.016s | 0.016s |

| 1,000,000 | 2.480s | 0.047s | 0.203s | 0.094s |

| 10,000,000 | 33.134s | 0.406s | 2.340s | 1.061s |

Pretty darn good, in fact better than sorting a given range. Again, once we get up to ten million elements we lose responsiveness, I think the lesson here is that once we get over one million elements we need to find some other techniques.

I want to repeat my warning from above. The code I have just presented for the pivot sort fails in some cases (and fails in an “illegal memory access” way, not just a “gives the wrong answer” way). I will write a follow up post that fixes these problems.

Wrap up

We have seen that with a little work, and some standard library algorithms, we can do distinctly better than sorting the entire list, we can increase the number of elements by 2 orders of magnitude and still get reasonable performance. Extremely large numbers of elements still give us problems though.

Is this the best we can do? It’s the best I’ve been able to come up with – if you have something better please post it in the comments, or send me a link to where you describe it.

One last thought. Earlier on I dismissed option #2 – “Use previous knowledge of the ordering to avoid having to do a complete re-sort.” by saying that I wanted algorithms that would work without prior knowledge. We now have those algorithms, and once we have applied any of them we will have some knowledge of the ordering. For example, once we have sorted a given range we can divide the data set into 3 – the first block of data has all the right elements, just in the wrong order, the second block has all of the right elements in the right order and the third block has all of the right elements in the wrong order. We can use this information as we’re scrolling through the list to make updates quicker.

I think one second to sort 10 million items is pretty darn good – I mean sure, it would be nice if everything were instantaneous, but do your users really need to sort 10M-item lists often enough that 1 second is a problem?

On the other hand, I wish we had anything close to your dedication to performance around here – the fact that it takes about 15 seconds just to log in (something every user does at least once per day, usually more than once) isn’t even a blip on the radar of those in charge of development priorities.

This is indeed a difficult problem to solve, and I like your solution. I think it would be hard to beat.

When I started reading your post, my first instinct (before I read your solution) was not to mutate the original vector, but to have a new container which would lazily produce sorted values on demand. This would be useful if, for example, you have two such windows open, and each could be sorted independently, while still sharing the same unsorted data because it’s represented through the sorted lazy “view” container. This is an orthogonal issue to the performance, but still an interesting thought.

My second thought is that perhaps a list of 10-million items might not fit the user requirements exactly, whether or not it’s sorted. If a project is that big, then perhaps a “list of files” is the wrong tool for the job (of finding a particular file, I presume). I don’t know exactly what the “right tool for the job” would be – it depends on the specific use cases that the window is targeted at fulfilling. Perhaps it would involve an aggressive filtering process before any sorting. The general idea is that perhaps there would be a way of improving the performance simply by changing the requirements, while still meeting the user’s needs. This is, of course, a non-solution in terms of “how do we do xyz fastest in C++”, but something I would seriously consider if I encountered this in the wild and it turned out to be a real problem.

If the 10-million-item-list is indeed the correct solution (which it probably is, since that’s what you’ve chosen), and the worst-case performance is deemed unacceptable, then perhaps a few sorted indexes would fix the problem, and be a compromise between a keeping multiple copies of the list and keeping a single copy which gets reordered. Of course it wouldn’t be such an elegant solution as yours, and would introduce its own problems, and perhaps errs on the wrong side of your size-space trade-off considerations, but it would certainly give you blazing performance.

I think the idea of a sorted list being the wrong tool for 10 million elements is a good one. I don’t yet have a use case for 10 million elements, and if I get one it won’t be filenames (my record so far is 600,000 files and I am only getting that by combining 55 open source projects – an unrealistic use case). Perhaps if I come up with a genuine use case for 10 million elements I’ll write another blog post about it.

If it really has to be a 10 million element sorted list I think you’re right, a collection of indices would be necessary and I would just have to deal with the memory usage.

If sorting of 10M records is a regular task, then IMO this should be done by a properly indexed database (which internally can do all kinds of optimizations…)

Even there I’d say it’s pretty hard to beat 1 second…

You’re right. People build careers and Phd theses on making this stuff work well – I am being optimistic that I can ride in with a couple of algorithms and get blazing performance on enormous data sets. I found the exercise of pushing something way past reasonable limits to be interesting though.