In the first part of this series we looked at a number of ways of sorting the displayed elements without having to sort every item in a (possibly) large list. The final “pivot sort” kept the position of the currently selected element fixed on the display while the rest of the list sorted around it. The code was relatively simple – all of the heavy lifting was done by the standard library with one call to std::partition and two calls to std::partial_sort. There is only one problem – the function doesn’t work for a number of cases.

We can work out what these cases are in a number of ways. One is simply to run a large number of tests – always a good idea when dealing with code like this, however, without having thought through the problem and the special cases we can’t guarantee that we are testing all of the relevant cases. So, let’s look at the original problem statement and see what special cases we can find.

We never had an “official” statement of the problem, but this will do:

Keep the position of the currently selected element fixed on the display while the rest of the list sorts around it.

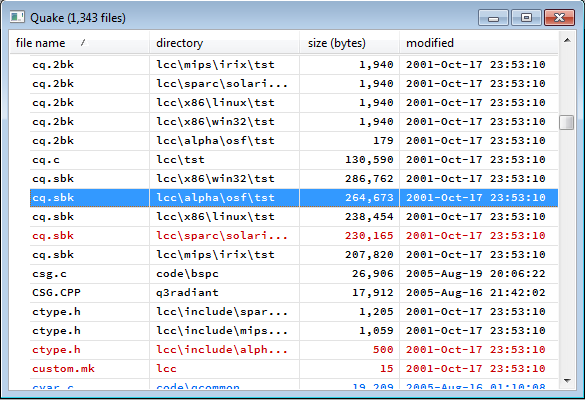

Are there any circumstances under which we cannot achieve this goal, or are there any circumstances where we can achieve this goal but it will lead to odd results? Here’s one problem case, we keep the selected element in its same place on the display but we end up with several blank spaces at the top of the display. We have achieved our goal, but we are not making the most of the display area (mouse over the image to see the before and after pictures):

We can also imagine a similar problem occurring at the end of the list. These “blanks” can occur for any length list if the selected element ends up close enough to the beginning or end of the list, however if the length of the list is smaller than the number of elements we display this problem is going to occur all of the time. There is also an implicit assumption I’ve been making all along that the selected element is actually on the display when we start to sort. If the element isn’t on display we can apply the same rules (the selected element remains in its same position relative to the display and everything else sorts around it) but it isn’t obvious to me that we’ll get useful results from doing that.

These are exactly the sort of cases we’d expect to need to test – values that are large or small or near some boundary. Several of these cases indicate areas where we need to make a decision – do we allow the list to display fewer elements than it can to keep the selected element in the correct place? What is the right behaviour if the selected element is off the display before the sort starts?

For the purposes of this post I am going to state the the algorithm must always display as many elements as possible (i.e. there will be no blanks on the display unless the total number of elements is less than the number we can display, and even then there must be as few blanks as possible). I will also state that if the selected element is off the top of the display it will end up in the top position on the display, and if the selected element is off the bottom of the display it will end up in the bottom position on the display.

There is another approach we can take that is helpful for this problem. Work our way through the code and see what places in the code might give us unexpected results.

void

sortAroundPivotElementBasic(

UInt nDisplayedElements,

UInt topElementIndex,

UInt pivotElementIndex,

Comparator const& comparator,

UInt& newTopElementIndex,

UInt& newPivotElementIndex,

FMDVector& files )

{

FileMetaData const pivotElement(

files[ pivotElementIndex ] );

Iter pivotElementIterator(

std::partition(

std::begin( files ),

std::end( files ),

std::bind( comparator, _1, pivotElement ) ) );

The first couple of statements are good (I am assuming that nobody will call the code with pivotElementIndex outside the range of the file vector – if we want to guard against that we can easily add preconditions). Things start to get hairy with the next statement though:

UInt const pivotOffset(

pivotElementIndex - topElementIndex );

We are subtracting two unsigned integer values. If pivotElementIndex is smaller than topElementIndex, the result of the subtraction is a very large positive value that is almost certainly unexpected and unwanted. If pivotElementIndex is smaller than topElementIndex it means that the pivot element was above the top of the display – one of the problem cases we have just identified.

Iter displayBegin( pivotElementIterator - pivotOffset );

We know we can’t trust pivotOffset – it might be some extremely large number, however even if pivotOffset has a reasonable value, we do not know for certain that pivotElementIterator - pivotOffset will produce a legal iterator (i.e. one in the range files.begin() to files.end()). Notice that this matches another of the problem cases we identified above – the top element on the display actually comes before the first element in the vector leaving blank lines on the display.

Iter displayEnd( displayBegin + nDisplayedElements );

displayBegin is already suspect, and even if displayBegin is within range we have no guarantee that displayBegin + nDisplayedElements will be in range. displayEnd might end up being past the end of the vector – the “blanks at the end of the list” problem.

newTopElementIndex = displayBegin - std::begin( files ); newPivotElementIndex = pivotElementIterator - std::begin( files );

There is nothing wrong with these two lines if displayBegin and pivotElementIterator are in range. pivotElementIterator is fine, however as we have just seen, displayBegin may well be out of range.

std::partial_sort( pivotElementIterator, displayEnd, std::end( files ), comparator );

We know that pivotElementIterator is good, but displayEnd is suspect.

std::partial_sort( ReverseIter( pivotElementIterator ), ReverseIter( displayBegin ), ReverseIter( std::begin( files ) ), std::bind( comparator, _2, _1 ) ); }

Again, pivotElementIterator is good, but displayBegin is suspect.

There are three iterators that we need to know to perform this sort:

pivotElementIteratordisplayBegindisplayEnd

We have seen that pivotElementIterator is easy to get correct, the problem is with the other two iterators. This is what I came up with to get correct values for displayBegin and displayEnd.

void

sortAroundPivotElement(

UInt nDisplayedElements,

UInt topElementIndex,

UInt pivotElementIndex,

Comparator const& comparator,

UInt& newTopElementIndex,

UInt& newPivotElementIndex,

FMDVector& files )

{

FileMetaData const pivotElement(

files[ pivotElementIndex ] );

Iter const newPivotElementIterator(

std::partition(

std::begin( files ),

std::end( files ),

std::bind( comparator, _1, pivotElement ) ) );

The first two lines stay the same – the only possible errors here can be avoided by the caller.

newPivotElementIndex =

newPivotElementIterator - std::begin( files );

The calculation of newPivotElementIndex hasn’t changed, I have just moved it to earlier in the function because I am going to make use of it.

pivotElementIndex = boost::algorithm::clamp(

pivotElementIndex,

topElementIndex,

topElementIndex + nDisplayedElements - 1 );

We fix the problem of the pivot element being off the display by clamping the pivot element’s position to the limits of the display. We do this after we get the value of the pivot element. (Notice that since boost::algorithm::clamp is effectively a combination of std::min and std::max we have to use - 1 for our “end of range” calculation).

UInt pivotOffset( pivotElementIndex - topElementIndex );

Since we have clamped the value of pivotElementIndex we know that the subtraction will not overflow – pivotOffset has a legal value.

pivotOffset = std::min( pivotOffset, newPivotElementIndex );

We clamp pivotOffset to make sure that we won’t run off the beginning of the vector.

Iter displayBegin(

newPivotElementIterator - pivotOffset );

newTopElementIndex = displayBegin - std::begin( files );

We know for sure that displayBegin is a valid iterator since we know that pivotOffset is in a legal range.

nDisplayedElements = std::min(

nDisplayedElements,

files.size() );

Solves any problems caused by having a list shorter than the size of our display.

Iter const displayEnd(

files.begin() +

std::min(

newTopElementIndex + nDisplayedElements,

files.size() ) );

displayEnd is now in the correct place, and a legal iterator. Now we can use displayEnd to recalculate displayBegin (yes, we have to calculate displayBegin twice).

displayBegin = std::min( displayEnd - nDisplayedElements, displayBegin ); newTopElementIndex = displayBegin - std::begin( files );

Finally all of our iterators are correct and we can sort the elements.

std::partial_sort( newPivotElementIterator, displayEnd, std::end( files ), comparator ); std::partial_sort( ReverseIter( newPivotElementIterator ), ReverseIter( displayBegin ), ReverseIter( std::begin( files ) ), std::bind( comparator, _2, _1 ) ); }

Here’s the function in a single block for clarity:

void

sortAroundPivotElement(

UInt nDisplayedElements,

UInt topElementIndex,

UInt pivotElementIndex,

Comparator const& comparator,

UInt& newTopElementIndex,

UInt& newPivotElementIndex,

FMDVector& files )

{

FileMetaData const pivotElement(

files[ pivotElementIndex ] );

Iter const newPivotElementIterator(

std::partition(

std::begin( files ),

std::end( files ),

std::bind( comparator, _1, pivotElement ) ) );

newPivotElementIndex =

newPivotElementIterator - std::begin( files );

pivotElementIndex = boost::algorithm::clamp(

pivotElementIndex,

topElementIndex,

topElementIndex + nDisplayedElements - 1 );

UInt pivotOffset( pivotElementIndex - topElementIndex );

pivotOffset = std::min( pivotOffset, newPivotElementIndex );

Iter displayBegin(

newPivotElementIterator - pivotOffset );

newTopElementIndex = displayBegin - std::begin( files );

nDisplayedElements = std::min(

nDisplayedElements,

files.size() );

Iter const displayEnd(

files.begin() +

std::min(

newTopElementIndex + nDisplayedElements,

files.size() ) );

displayBegin = std::min(

displayEnd - nDisplayedElements,

displayBegin );

newTopElementIndex = displayBegin - std::begin( files );

std::partial_sort(

newPivotElementIterator,

displayEnd,

std::end( files ),

comparator );

std::partial_sort(

ReverseIter( newPivotElementIterator ),

ReverseIter( displayBegin ),

ReverseIter( std::begin( files ) ),

std::bind( comparator, _2, _1 ) );

}

I quite like my original function – sortAroundPivotElementBasic. I don’t like the new function nearly as much. Of course, sortAroundPivotElement has the advantage that it works (not something to dismiss lightly) but it lacks Tony Hoare’s quality of being “so simple that there are obviously no deficiencies”. I believe that the code is correct. I have done extensive testing on the function. I worked my way through all of the places in the code where things might be incorrect and made sure that I was doing appropriate checks. Even with all of that I am not convinced of my ability to explain the code correctly (I have done my best with the explanation above but I don’t think that my explanation is perfect).

Of course one possibility is that I am not as good at reading, writing and explaining code as I should be, but even though that’s true, it’s still worth looking at ways to improve sortAroundPivotElement.

The function now obviously splits into three sections (that was always the case, it just wasn’t as clear with the basic version):

- Calculate the new position of the pivot element

- Calculate

displayBeginanddisplayEnd. - Sort the elements on screen

The calculation of displayBegin and displayEnd is an obvious step to split out into its own function. That would at least keep the main function closer to the core parts of the algorithm, and keep all of the special case handling in a separate function.

I can think of various ways to handle the special cases separately (if the number of elements in the vector is smaller than the number we can display we might just as well sort the entire vector; if the new position of the pivot element is close enough to the beginning or end of the vector to cause a problem we could just use std::partial sort on the beginning or end) but they add the overhead of splitting out the special cases.

Maybe I’ll eventually come up with a better way of calculating displayBegin and displayEnd, in the meantime, we have a function that works.

Unsigned integer variables

I made a conscious decision when I wrote this code to avoid integer conversions (implicit or explicit) as much as possible. That lead to my basic integer type being an unsigned type:

typedef std::vector< FileMetaData > FMDVector;

typedef FMDVector::size_type UInt;

I was able to achieve my goal – there are no implicit or explicit integer conversions. I have a working function where every integer in use is the same unsigned type. Surely this is a good thing?

The basic problem with unsigned integer types is that the model they provide breaks down when you try to set them to a negative value, whether directly or as the result of an operation (such as the subtraction in sortAroundPivotElementBasic) that could overflow.

This has been discussed more than once on comp.lang.c++.moderated:

I am opposed to using unsigned integers for arithmetic (rather than bit array) purposes. Every time I have tried to use unsigned integers for quantities that must always be positive (for example, the width and height of a rectangle in a 2D graphics system), I inevitably end up needing to subtract them, and all of a sudden I have overflow problems.

I managed to make an “all unsigned all the time” policy work with sortAroundPivotElement. I am slightly surprised about that because during development I had several problems with overflow. It took some time to come up with a solution where overflow was not a problem.

Even though I managed to get a working solution, I would still change the unsigned ints for signed ints if I was putting this into production code. The function as it stands is fragile. I managed to come up with a solution without overflow, however if someone (and this includes me) has to change the function at a later date (e.g. because we want the special cases to be handled differently) they will have to do a bunch more work to maintain that property (and might fail under circumstances that will, of course, elude all testing and only manifest themselves when a potential customer is evaluating the software).

C++11 makes this easy for us by giving us an easy way to make a signed type out of the corresponding unsigned type:

typedef std::make_signed< UInt >::type Int;

Of course then we will have to convert between integer types since std::vector::size returns an unsigned type. We can moan about this, but it isn’t going to change.

Imagine the user shrinks the window so that the height is less than one slot. If that leads to nDisplayedElements being 0, the boost::algorithm::clamp becomes a no-op (with unsigned types) or will assert (with signed types). The no-op behavior is fine, since the function itself should be a no-op if nDisplayedElements is zero.

I agree that unsigned integer types are almost universally a bad idea for anything subject to general arithmetic. However, I think they make perfect sense for indexes.

* There are very few cases where you need general arithmetic with indexes.

* Unsigned integer overflow is well defined (so bugs will be reproducible) as opposed to signed integer overflow which gets you into undefined behavior (things may appear to work because the implementation does the obvious two’s complement thing but you’re unknowingly relying on UB). If a bug makes it to production code, I’d rather it have well-defined behavior.

* The STL containers all have unsigned size types. Trying to compare signed indexes to unsigned sizes is fraught with peril. Even if you carefully use std::make_signed, you’ve introduced a distinct limit that’s different than the one your container handles. That’s more test cases you probably don’t even realize you need.

* Telling programmers not to use unsigned types inevitably leads to `int size = vec.size();`, which not only changes the signedness of the quantity, but often drops a huge chunk of range (e.g., on Windows, which is LLP64, int is a 32-bit signed integer while std::size_t is a 64-bit unsigned integer, so good luck processing a 3GB std::string).

And while you might be able to prove that all the cases where assigning and comparing an unsigned size to a signed index are OK (because your sizes never get that large), it requires disabling multiple compiler warnings that might help you spot real bugs in other parts of the project. I view any style guideline that forces me disable compiler warnings as a bug. I’d much rather double-check my subtractions.

I did not take that case into account – thank you for pointing it out. I would probably fix this by putting a precondition on the function and not calling it at all, but it could also be fixed in the code itself. I think the fact that this works with unsigned types is a coincidence rather than an argument in favour of unsigneds.

I don’t know how to judge the number of cases across the industry as a whole, but I have certainly needed to subtract one index from another and I have used general arithmetic to produce indices.

This has never been an issue for me (which of course says nothing about other people’s experience). I rarely deal with values in the range of +-2^31, and when I do I have needed to make sure that I use 64 bit values consistently. If 31 or 32 bits isn’t enough for what you’re doing you already have to make sure that you’re using an appropriately sized integer. How many cases are there where 31 bits isn’t enough but 32 bits always is? (I genuinely don’t know, I am curious whether there are any examples out there). My feeling is that if that is the case you have then you have to know what you’re doing and be very careful about the types you are using. I am sure there are cases where you absolutely have to use unsigned types, but I think if you’re in that situation you need to be very careful about using (or not using) int.

I haven’t needed to disable any compiler warnings. I use explicit conversions. For the type of code I write (and that’s an important qualification, I don’t deal with Big Data) that works.

I have never managed to do that reliably. Quite possibly a failing on my part.

Many of the difficulties with the interaction of signed and unsigned values in C and C++ stems from the fact that the when compilers ignored overflow, unsigned values would generally behave like members of an abstract algebraic ring, and types which behaved as members of an abstract algebraic ring were useful for many purposes, but no rigid distinctions were made between types that were used to represent numbers and types which were used represent algebraic rings. Nearly all of the confusion stemming from “unsigned” types would be solved if languages had separate “non-negative number” and “algebraic ring” types (numbers of any size should implicitly convert to rings of any size, and rings of any size should implicitly convert to *smaller* rings; conversion of a ring to a member of a larger domain should require explicit specification of whether it should use the member of the larger domain which is closer to zero, or the first one which can be reached in the positive direction).

As it is, unsigned types mostly behave like rings, but they’re not consistent about it. For example, rings whose type would be smaller than `int` are routinely replaced with numbers, even when this could cause Undefined Behavior (e.g. on a machine with a 64-bit `int`, multiplying 3000000000u by 3000000000u would cause UB).

I wonder if it would be possible in C++ without too much overhead to define types which would behave consistently like rings and types which would behave consistently like non-negative *numbers*? Numeric literals would be a pain, in much the way string literals are, but it might be possible to have numbers obey the usual rules of arithmetic in ways they presently don’t (e.g. have -1 compare smaller an an “non-negative number” 2). Unfortunately, the only sane way I can think of to handle subtraction of non-negative numbers would be to either specify a trap in case of overflow, or have the result of the subtraction be the next larger integer type [I wouldn’t bother with having an unsigned number type of the largest size].

The discussion about signed and unsigned types is fascinating. In terms of safety, I think the correct logical solution would be if C++ were designed such that results of arithmetic operators more correctly express their range. For example:

– Addition of 2 unsigned 16 bit integers results in an unsigned 17 bit integer

– Subtraction of 2 unsigned 16 bit integers results in a signed 17 bit integer

– etc

Then you define that integers can be assigned only to variables with a range at least as large, unless truncated by an explicit cast. Using these language rules you would be statically guaranteed never to have an overflow except where you explicitly cast, and mixing unsigned and signed becomes a non-issue. But in response the developer would have to take more care about explicitly defining the ranges that accepted for a variable. If you intend your list to have up to 100 million items, you would use a 27 bit unsigned integer instead of a 32 bit signed or unsigned integer, so that your resulting calculations don’t land up taking 33 or 34 bits. This is all stuff you would have had to work out on paper anyway if you want to prove that there’s no overflows in your program, so making it explicit shouldn’t annoy anybody. (yeah right!)

These arguments apply only to integers. Bit sets and “algebraic rings” are different concepts entirely and should have correspondingly different types (as John Payson was talking about).